Should we fear killer robots?

(CNN)Physicist Stephen Hawking recently warned of the dangers of artificial intelligence and “powerful autonomous weapons.” Autonomous technology is racing forward, but international discussions on managing the potential risks are already underway.

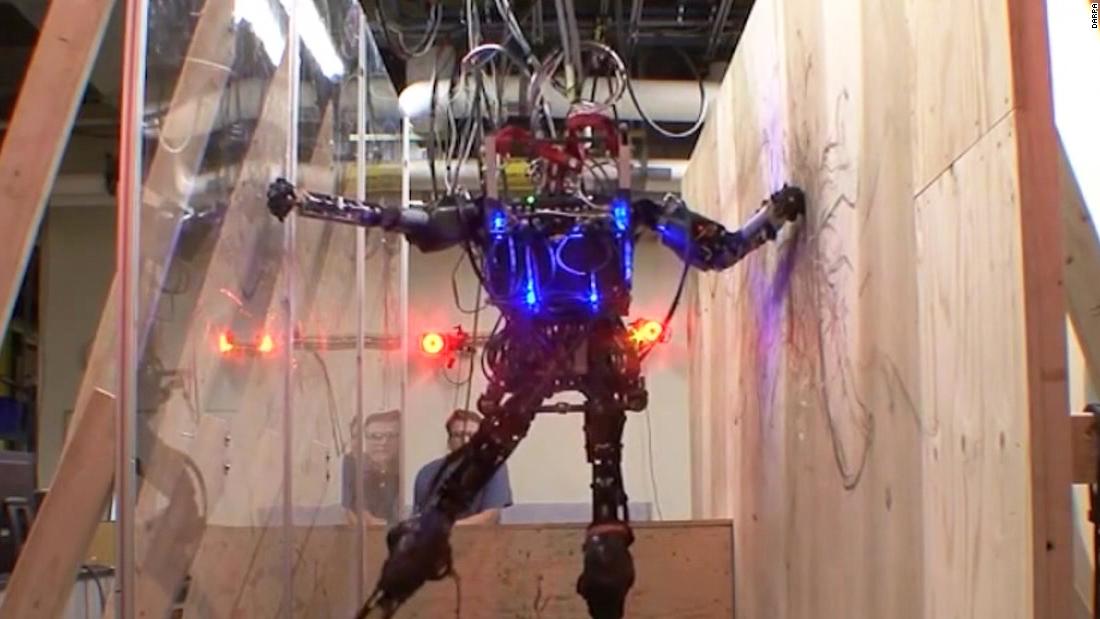

This week, nations enter the fourth year of international discussions at the United Nations on lethal autonomous weapons, or what some have called “killer robots.” The UN talks focus on future weapons, but simple automated weapons that shoot down incoming missiles have been widely used for decades.

The same computer technology that powers self-driving cars could be used to power intelligent, autonomous weapons.

Recent advances in machine intelligence are enabling more advanced weapons that could hunt for targets on their own. Earlier this year, Russian arms manufacturer Kalashnikov announced it was developing a “fully automated combat module” based on neural networks that could allow a weapon to “identify targets and make decisions.”

Whether or not Kalashnikov’s claims are true, the underlying technology that will enable self-targeting machines is coming.

Machine image classifiers, using neural networks, have been able to beat humans at some benchmark image recognition tests. Machines also excel in situations requiring speed and precision.

These advantages suggest that machines might be able to outperform humans in some situations in war, such as quickly determining whether a person is holding a weapon. Machines can also track human body movements and may even be able to catch potentially suspicious activity, such as a person reaching for what could be a concealed weapon, faster and more reliably than a human.

Machine intelligence currently has many weaknesses, however. Neural networks are vulnerable to a form of spoofing attack (sending false data) that can fool the network. Fake “fooling images” can be used to manipulate image-classifying systems into believing one image is another, and with very high confidence.

Moreover, these fooling images can be secretly embedded inside regular images in a way that is undetectable to humans. Adversaries don’t need to know the source code or training data a neural network uses in order to trick the network, making this a troubling vulnerability for real-world applications of these systems.

More generally, machine intelligence today is brittle and lacks the robustness and flexibility of human intelligence. Even some of the most impressive machine learning systems, such as DeepMind’s AlphaGo, are only narrowly intelligent. While AlphaGo is far superior to humans at playing the ancient Chinese game Go, its performance reportedly drops off significantly when it plays on a board of a different size than the standard 19×19 board it learned on.

The brittleness of machine intelligence is a problem in war, where “the enemy gets a vote” and can deliberately try to push machines beyond the bounds of their programming. Humans are able to flexibly adapt to novel situations, an important advantage on the battlefield.

Humans are also able to understand the moral consequences of war, which machines cannot even remotely approximate today. Many decisions in war do not have easy answers and require weighing competing values.

As an Army Ranger who fought in Iraq and Afghanistan, I faced these situations myself. Machines cannot weigh the value of a human life. The vice chairman of the US Joint Chiefs of Staff, Gen. Paul Selva, has repeatedly highlighted the importance of maintaining human responsibility over the use of force. In July of this year, he told the Senate Armed Services Committee, “I don’t think it’s reasonable for us to put robots in charge of whether or not we take a human life.”

The challenge for nations will be to find ways to harness the benefits of automation, particularly its speed and precision, without sacrificing human judgment and moral responsibility.

There are many ways in which incorporating more automation and intelligence into weapons could save lives.

At the same time, nations will want to do so without giving up the robustness, flexibility and moral decision-making that humans bring. There are no easy answers for how to balance human and machine decision-making in weapons.

Some military scenarios will undoubtedly require automation, as is already the case today. At the same time, some decision-making in war requires weighing competing values and applying judgment. For now at least, these are uniquely human abilities.

Read more: http://www.cnn.com/2017/11/14/opinions/ai-killer-robots-opinion-scharre/index.html